Prover-Verifier Games improve legibility of language model outputs

Making sure that language models produce understandable text is crucial to making them helpful for people, especially when dealing with complex tasks like solving math problems.

We found that when we optimize the problem-solving process of strong models solely for getting the correct answer, the resulting solutions can become harder to understand. In fact, when human evaluators with limited time assessed these highly optimized solutions, they made nearly twice as many errors as when they assessed less optimized solutions. This finding highlights the importance of not just correctness, but also clarity and ease of verification in AI-generated text.

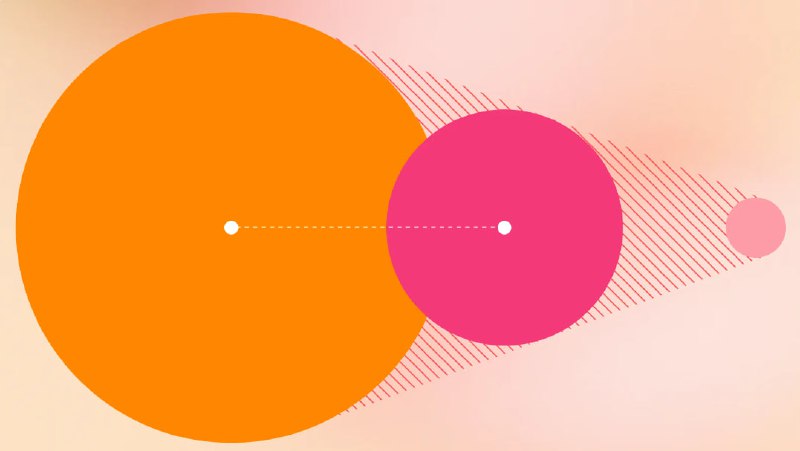

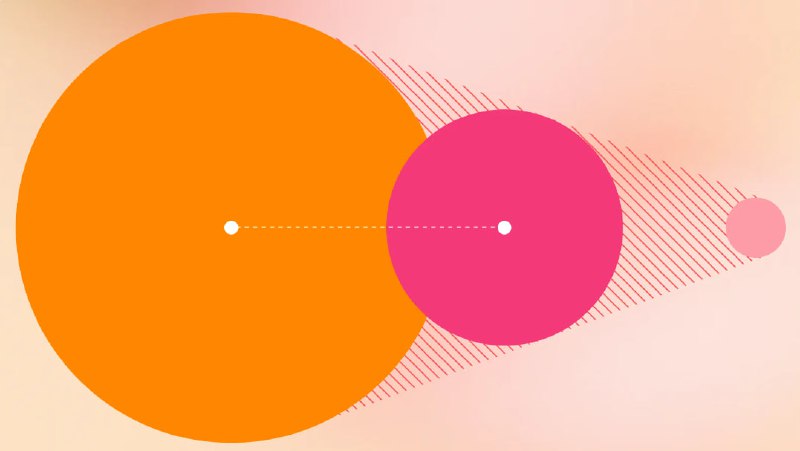

We found that training advanced language models to produce text that weaker models can easily verify also makes that text easier for humans to evaluate. We call this improving legibility.

This is where prover-verifier games come into play.
